Inaport 7.3 – Dramatically improving matching performance using local cache tables

With the rise of CRM systems such as Microsoft CRM 2011 which are accessed via web services, performance becomes more of an issue. Analysis of Inaport projects indicates that for a typical import into a web hosted CRM system, up to 99% of the time may be spent in the web service calls to the target CRM system.

An important objective, then, is to minimize the number of web services calls as far as possible. This post describes some techniques available in Inaport 7.3 that can dramatically reduce or eliminate the cost of matching on the target system, by removing the need to make any web services calls.

While these techniques have the biggest impact with web services based systems, they can also be very useful for on premise based systems such as SalesLogix.

It is a long and moderately technical post. A subsequent post will provide some examples of using these caching techniques. If you have any questions, it is likely that other readers do as well – feel free to ask for clarification in the comments, or to contact InaPlex direct.

The basic technique is caching the primary key of the target records in the source database. This can be done by one of:

- updating the source record with the primary key of the matched record in the target;

- using a cross reference table, that stores the primary keys of the source and target records, along with match information;

- building a match table that stores the primary key of the target, along with required match information.

Each of these techniques is useful in different scenarios.

Overview of Matching

Before we go into the details of the caching techniques, a brief refresher on matching in general. If you are already an expert, skip ahead.

Matching is the process of making sure:

- the incoming record does not already exist (so prevent duplicates), or

- finding the correct record in the target to be updated.

For example, the incoming company name may be ‘Acme Inc’. In the target system we may have ‘Acme Inc’ and ‘Acme Widgets’. The matching process needs to ensure that ‘Acme Inc’ is updated and ‘Acme Widgets’ is not touched.

Inaport provides several methods for implementing matching.

Standard Matching

The simplest and in some ways the most powerful is Standard Matching. This allows you to build an expression from one or more fields, and then compare the results to identify the correct match.

In the example above, we could just compare the company name to the company name. Standard matching allows us to use expressions as well; for example the normcomp() function can be used to normalize a company name by removing punctuation and noise words such as ‘Inc’, ‘Incorporated’ etc. This would mean that ‘Acme’, ‘Acme Inc’ and ‘Acme, Incorporated’ would all match. The screen shot below shows how you might set up standard matching using the normcomp() function.

Standard matching is good for use with web services APIs – a small number of calls are made to download the required match data, and then all matching in done in memory on the local system. Even here though, the process of downloading the match data can take considerable time for a large system – we need to do better.

Fuzzy Matching

Fuzzy Matching is implemented in the same way as Standard matching – an in memory index is built by downloading the relevant fields from the target system. The difference is that Fuzzy Matching allows string similarity scoring. In the screen shot below, see how variations on ‘Acme Inc’ have been scored.

SQL Matching

In a conventional import with a SQL based target system, we can switch to using SQL Matching. SQL matching allows us to do a SQL query against the target system for each incoming record. This has the benefit of no upfront cost for building the in memory index, but does have a cost to do at least one query for each match required. For a web services based system, this is a very substantial performance cost.

Demonstration Setup

For the purposes of this demonstration, we will work with a sample company table in the source data as shown below:

Notice that this table has a company ID field (integer primary key) and a field called ‘crm_id’. When the record is created or updated in CRM, this field will be updated with the ID of the record in CRM.

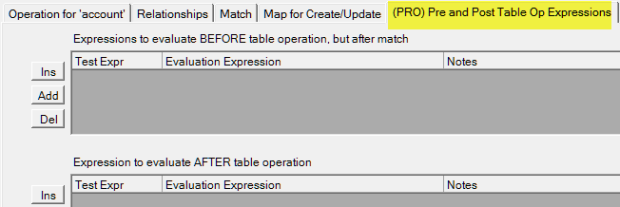

These techniques rely on using the Pre and Post table Op Expression tab on the table setup in Inaport. This tab allows us to defines expressions that get executed either:

- before the target table is modified (Pre Op), or

- after the table is modified (Post Op)

Here is a screen shot of the tab:

Pre/Post Sub-Tab

Method One: Updating a Source Table

The first technique we will discuss is updating the source table with the ID of the target record.

The match being used is the normcomp() expression on both the source and the target company name fields:

Match – Using normcomp() function

In the Post Op tab, we can update the source record using a dbupdate() statement, combined with the pk() function.

The pk() function returns the primary key of the record just created or updated in the target system. In this case, the record is being inserted into the account table of a Microsoft CRM 2011 system, so the primary key will be a GUID.

The the expression being used to update the source table is:

This dbupdate() expression updates the source table with the value of the primary key just created (using the pk() function). The where clause is used to identify the correct record in the source table to update, using the value of the ID in the current source record.

Here are the results:

You can see that the crm_id field has now been updated with GUID values from MSCRM.

NOTE: As the the update is updating the same table we are reading for the source, there is potential for table locking (depending on your source query). You may want to add a NOLOCK table hint to your query to prevent this:

select * from company with (nolock)

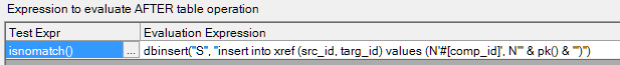

Method Two: Using a Cross Reference table

Updating the source table will often require a modification to the schema of the table – adding a new column. This can be avoided by adding a cross reference table to the source database, which can hold both the source primary key and the primary key for the target.

If we are mapping multiple entities to the target, the cross reference table can also hold an entity type. This can occur, for example, when doing a migration – you will be mapping company, contact, history, and other entities to the target. The cross reference table can hold details for all of them.

To add data to the table we use a post-operation function, similar to the example above. Now, however, we need to insert data into the table rather than updating it, and we need to include the source and target primary keys. See screen shot for an example, and the results below.

Insert into local cache table

Updated local cache table

In this example, the insert statement does not update the src_type and targ_type fields, but that would be easy to add. Please note that this is just a model of a local cache table – you are free to design one that meets your particular requirements.

Method Three: Building a Match Table

The third option to be considered is building a local match table. This is effectively a combination of update local and using a cross reference table.

The basic idea is to copy the required match data from the target system into a local table and use it for matching. It can also have the source and target ids added to it, so it can be used as a cache table as well.

This technique is valuable when the match criteria are complex, and for systems other than web services based. For example, SalesLogix and SageCRM store address information in a separate address table. If we need to match on both company and and postal code, for example, it can be tricky.

Building a match table normally requires a separate profile to read the data from CRM and insert into the local table. Once built, the real profile uses the table for matching.

For this example, we use the following query in an Inaport to get the match data from CRM:

select accountid, name, address1_postalcode from account

which gets this data from CRM:

Match data query results

Inaport is then used to populated the local match table, with the following results:

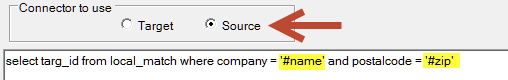

This pre-processing gives us a match table in the local database that can now be used by Inaport. The SQL match criteria in Inaport might be:

Inaport SQL for local match

There are a couple of points to note here:

- The source connector has been specified; this causes Inaport to run the SQL against the source instead of the target

- The source field names have been incorporated directly into the SQL – Inaport will replace the names with the values at run time.

There is another optimization available.

If your source SQL is able to include the target primary key – for example by doing a join between the data table and the match table – then you can replace the SQL query for matching with just the name of the field that holds the target id.

CONCLUSION

This has been a long and moderately technical post. A subsequent post will provide some examples of using these caching techniques.

If you have any questions in the interim, feel free to ask for clarification in the comments, or to contact InaPlex direct.

Hi David,

good post!

And nice to see the Post Table Operations in action 😉

Greetings from Berlin

Marco

Thanks David,

Very useful insight into best practices for using Inaport.

Thanks again!

Hi David,

Perefect!

Thanks for the post.

Regards

Uwe

Really good and helpful. I am pleased to say I have used some of the techniques but not all. The post table op method is great and efficient – I wish I had started using it earlier.

One other related technique which I have used with a hosted MSCRM system was to read back whole entities into a local SQL database using Inaport prior to running some complex SQL then having SQL queries write data back. This is only safe if you prevent other users from changing. It was useful when doing things such as merging records. It was surpisingly fast.

Paul